We get the question all the time: “Can CloudBolt move my VMs from my private cloud to Amazon? Or from Amazon to Azure?”

The answer is always the same: “Sure, but how much time do you have?”

Cloud-based infrastructures are revolutionizing how enterprises design and deploy workloads, enabling customers to better manage costs across a variety of needs. Often-requested capabilities like VM migration (or, as VMware likes to call it, vMotion) are taken for granted, and increasingly customers are interested in extending these once on-prem-only features to help them move workloads from one cloud to another.

At face value, this seems like a great idea. Why wouldn’t I want to be able to migrate my existing VMs from my on-prem virtualization platform directly to a public cloud provider?

For starters, it’ll take a really long time.

VM Migration to the Cloud

Migration (relocation is probably a better term) is the physical movement of a VM and its data from one environment to another. Migrating an existing VM to the cloud requires:

- Copying every block of storage associated with the VM.

- Updating the VM’s network configuration to work in the new environment.

- Lots and lots of time and bandwidth (see the first point).

Let’s assume for a minute that you’re only interested in migrating one application from your local VMware infrastructure to Amazon. That application is made up of 5 VMs, each with a 50 GiB virtual hard disk. That’s 250 GiB of data that needs to be moved over the wire. (Even if you assume some compression, you’ll see below that we’re still dealing with some large numbers.)

At this point, there is only one question that matters: how fast is your network connection?

| Transfer Size (GiB) | Upload Speed (Mb/s) | Upload Speed (MB/s) | Transfer Time (Seconds) | Transfer Time (Hours) | Transfer Time (Days) |
|---|---|---|---|---|---|
| 250 | 1.5 | 0.1875 | 1,431,656 | 397.68 | 16.57 |
| 250 | 10 | 1.25 | 214,748 | 59.65 | 2.49 |
| 250 | 100 | 12.5 | 21,475 | 5.97 | 0.25 |
| 250 | 250 | 31.25 | 8,590 | 2.39 | 0.10 |
| 250 | 500 | 62.5 | 4,295 | 1.19 | 0.05 |
| 250 | 1,000 | 125 | 2,147 | 0.60 | 0.02 |
| 250 | 10,000 | 1,250 | 215 | 0.06 | < 0.01 |

Transfer size uses binary gibibytes (1 GiB = 1,073,741,824 bytes), and upload speeds use decimal megabits (1 Mb/s = 1,000,000 bits per second). Each time assumes the link is fully saturated for the entire transfer.

The result from this chart is clear: the upload speed of your Internet connection is the only thing that matters. And don’t forget that cloud providers frequently charge you for that bandwidth, so your transfer cost keeps growing with every gigabyte you upload.
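To plug in your own numbers, note that the arithmetic is simply data size divided by link speed. Below is a minimal Python sketch. It assumes binary GiB for data, decimal Mb/s for the link, and a link that runs flat out for the whole transfer. The `compression_ratio` parameter is a hypothetical knob for the compression mentioned earlier, not a measured value, and the function names are ours.

```python
# Estimate how long it takes to upload VM disk data over an Internet uplink.
# Assumptions: sizes in binary GiB (2**30 bytes), link speeds in decimal Mb/s
# (10**6 bits/s), and the link stays fully saturated for the whole transfer.

BYTES_PER_GIB = 2**30
BITS_PER_MEGABIT = 10**6
SECONDS_PER_HOUR = 3600
SECONDS_PER_DAY = 86400


def total_disk_gib(vm_count: int, disk_gib: float) -> float:
    """Total data to move when every VM has a single disk of the same size."""
    return vm_count * disk_gib


def transfer_seconds(size_gib: float, uplink_mbps: float,
                     compression_ratio: float = 1.0) -> float:
    """Best-case seconds to upload size_gib over an uplink of uplink_mbps.

    compression_ratio is a hypothetical knob: 2.0 means the data shrinks
    to half its original size before it goes over the wire.
    """
    if uplink_mbps <= 0 or compression_ratio <= 0:
        raise ValueError("speed and compression ratio must be positive")
    bits_on_wire = size_gib * BYTES_PER_GIB * 8 / compression_ratio
    return bits_on_wire / (uplink_mbps * BITS_PER_MEGABIT)


if __name__ == "__main__":
    size = total_disk_gib(vm_count=5, disk_gib=50)  # the 5-VM example: 250 GiB
    for mbps in (1.5, 10, 100, 250, 500, 1000, 10000):
        secs = transfer_seconds(size, mbps)
        print(f"{mbps:>7} Mb/s: {secs:>12,.0f} s"
              f" = {secs / SECONDS_PER_HOUR:8.2f} h"
              f" = {secs / SECONDS_PER_DAY:6.2f} days")
```

Real transfers usually run slower than this because of protocol overhead, retransmissions, and contention with other traffic. Treat the result as a best case.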

Have more data to migrate? Then you need more bandwidth, more time, or both.
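Running the same arithmetic backward tells you how much sustained bandwidth a deadline demands. This is an illustrative sketch under stated assumptions. `required_uplink_mbps` is a hypothetical helper name, and `link_efficiency` is an assumed fraction of nominal bandwidth that you actually achieve.

```python
# Work backward: what sustained uplink does a migration deadline require?
# Assumptions: binary GiB for data size, decimal Mb/s for link speed.

BYTES_PER_GIB = 2**30
BITS_PER_MEGABIT = 10**6


def required_uplink_mbps(size_gib: float, deadline_hours: float,
                         link_efficiency: float = 1.0) -> float:
    """Minimum nominal uplink (Mb/s) to move size_gib within deadline_hours.

    link_efficiency is an assumed fraction (0 < e <= 1) of the nominal
    bandwidth you actually get; 1.0 means a dedicated, perfectly used link.
    """
    if deadline_hours <= 0 or not 0 < link_efficiency <= 1:
        raise ValueError("deadline must be positive and efficiency in (0, 1]")
    bits = size_gib * BYTES_PER_GIB * 8
    seconds = deadline_hours * 3600
    return bits / (seconds * link_efficiency) / BITS_PER_MEGABIT


if __name__ == "__main__":
    for gib in (250, 1024, 10240):  # one app, about 1 TiB, about 10 TiB
        for hours in (24, 72):
            print(f"{gib:>6} GiB in {hours:>2} h: "
                  f"{required_uplink_mbps(gib, hours):9.1f} Mb/s")
```

For example, moving the 250 GiB application within 24 hours takes roughly 25 Mb/s of dedicated, fully used uplink. Doubling the data or halving the deadline doubles that figure.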

If you want to do this for your entire environment, note that you’re effectively performing SAN mirroring. The same rules of physics apply, and while you can load a mirrored rack of storage on a truck and ship it to your DR site, most public cloud providers won’t line up to accept your gear.

The Atomic Unit of IT Is the Workload, Not the VM

When customers ask me about migrating VMs, they typically want to run the same workload in a different environment, whether for redundancy, best fit, or some other reason. If it’s the workload that’s important, why migrate the entire VM?

Componentizing the workload takes effort, but automating the application deployment with tools such as Puppet, Chef, or Ansible makes it much easier to deploy that workload into any supported environment.

Redeployment, Not Relocation

If migrating whole stacks of VMs to the cloud isn’t practical, how does an IT organization more effectively redeploy workloads to alternate environments?

Workload redeployment requires a few things:

- Any shared data the workload depends on (e.g., databases) must be available in each environment;

- Either a configuration management framework available in each target location, or

- Pre-built templates with all required components pre-installed.
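These requirements boil down to a simple rule. The shared data must be reachable, and the target also needs either a configuration management path or a ready-made template. Below is a minimal Python sketch of that check. The `Workload` and `TargetEnvironment` classes, `missing_requirements`, and every name in the example are hypothetical and do not correspond to any product's API.

```python
# Hypothetical model of the redeployment checklist: shared data must be
# reachable, plus either configuration management or a pre-built template.
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TargetEnvironment:
    name: str
    reachable_data: set[str] = field(default_factory=set)  # data sources reachable here
    has_cm_tool: bool = False  # e.g. Puppet, Chef, or Ansible can configure hosts here
    templates: set[str] = field(default_factory=set)  # pre-built images available here


@dataclass
class Workload:
    name: str
    required_data: set[str] = field(default_factory=set)  # data every copy needs
    template: str | None = None  # name of a pre-built template, if one exists


def missing_requirements(workload: Workload, env: TargetEnvironment) -> list[str]:
    """Return the reasons workload cannot be redeployed to env (empty = ready)."""
    problems: list[str] = []
    missing_data = workload.required_data - env.reachable_data
    if missing_data:
        problems.append(f"data not reachable: {sorted(missing_data)}")
    has_template = workload.template is not None and workload.template in env.templates
    if not (env.has_cm_tool or has_template):
        problems.append("no configuration management and no pre-built template")
    return problems


if __name__ == "__main__":
    app = Workload("web-app", required_data={"orders-db"}, template="web-app-base")
    envs = [
        TargetEnvironment("on-prem", {"orders-db"}, has_cm_tool=True),
        TargetEnvironment("public-cloud-a", {"orders-db"}, templates={"web-app-base"}),
        TargetEnvironment("public-cloud-b"),
    ]
    for env in envs:
        print(env.name, missing_requirements(app, env) or "ready")
```

The function returns reasons rather than a bare yes/no. That choice reflects the point above: each missing piece is work someone has to do before the workload can land there.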

I won’t walk through each of these points in detail here, but any of these options requires effort. Whether you’re working to componentize and automate application deployment and management in a CM/automation tool, or re-creating your base OS image and requirements in various cloud providers, you’re going to spend some time getting the pieces in place.

A possible alternative to VM migration is to deploy new workloads in two places simultaneously and then keep the needed data and resources mirrored between the two environments. In other words, you double your costs and still face the same data-syncing challenges. This approach likely makes sense only for the most critical production workloads, not for the typical developer workload.

Ultimately, Know Thy Requirements

It seems as though the concept of cloud has caused some people to forget physics. Although migrating existing VMs to a public cloud provider is an interesting concept, the bandwidth required to do it effectively is either very expensive or simply not available. Furthermore, VM migration to a public cloud assumes that the public cloud provider’s performance and availability are the same as or better than those of your on-prem environment… which is a pretty big assumption.

While some interesting technologies can help with the migration itself, customers still need to do the legwork to properly configure target environments and networks, not to mention determine which workloads can be moved effectively in the first place. Technology alone cannot replace sound judgment and decision making, and the cloud alone will not solve all of your enterprise IT problems.

And don’t forget that IT governance matters much more in the public cloud than in your on-prem environment. When end users deploy locally, they are unlikely to generate large cost overruns, but in the public cloud every deployment shows up on the bill. If you don’t control their access to the public cloud, you will eventually get a rude awakening when the next bill arrives.
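One simple form of that control is a spending cap that is checked before anything is provisioned. The sketch below is a hypothetical illustration, not CloudBolt’s implementation. `GroupBudget`, its fields, and the cost figures are assumptions, and in practice the cost estimates would have to come from somewhere, such as the provider’s published pricing.

```python
# Hypothetical per-group spending cap, checked before anything is provisioned.
from dataclasses import dataclass


@dataclass
class GroupBudget:
    monthly_limit: float    # cap on estimated monthly spend for the group
    committed: float = 0.0  # estimated monthly cost of what the group already runs

    def request(self, estimated_monthly_cost: float) -> bool:
        """Approve and reserve the cost only if the group stays under its cap."""
        if estimated_monthly_cost < 0:
            raise ValueError("cost estimate cannot be negative")
        if self.committed + estimated_monthly_cost > self.monthly_limit:
            return False
        self.committed += estimated_monthly_cost
        return True

    def release(self, estimated_monthly_cost: float) -> None:
        """Return budget when a workload is decommissioned."""
        self.committed = max(0.0, self.committed - estimated_monthly_cost)


if __name__ == "__main__":
    dev = GroupBudget(monthly_limit=1000.0)
    print(dev.request(400.0))  # approved
    print(dev.request(500.0))  # approved, 900 committed
    print(dev.request(200.0))  # denied: would reach 1100
    dev.release(400.0)
    print(dev.request(200.0))  # approved after a decommission frees budget
```

The design choice is to deny the request up front. An over-budget deployment never starts, rather than surfacing as a surprise on the next bill.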

Want Some Help?

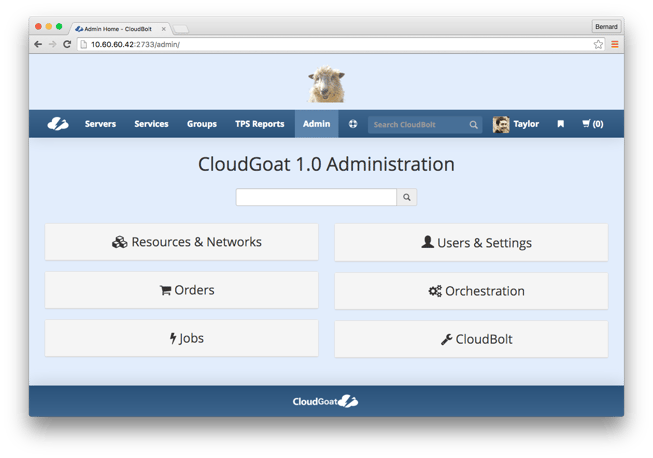

So how does CloudBolt actually satisfy this need? We focus on redeployment and governance. An application defined in a CM tool can be deployed to any target environment, and CloudBolt then allows you to define multi-tiered application stacks that can be deployed to any capable target environment. Your users and groups are granted the ability to provision specific workloads and applications into the appropriate target environments and networks. And strong lifecycle management and governance ensure that your next public cloud provider bill won’t break the bank.

Want to try it now? Let us set up a no-strings-attached demo environment for you today.

Part of the CloudBolt team at Red Hat Summit 2013. Sales Director Milan Hemrajani took the picture.
