In our latest release, we've continued enabling IT organizations that want to provision and manage their applications more effectively. CloudBolt v4.6 makes providing self-service IT access to applications easier than ever, regardless of whether that application resides on a single server, or is a complete end-to-end stack of servers deployed across several environments. Like never before, users can interactively request entire stacks with just a few clicks, and deploy those stacks into any one of the dozen or so supported cloud and virtualization platforms.

We haven’t stopped there, though. In addition to streamlining the application provisioning process, we’ve put a significant amount of effort into other areas of CloudBolt as well.

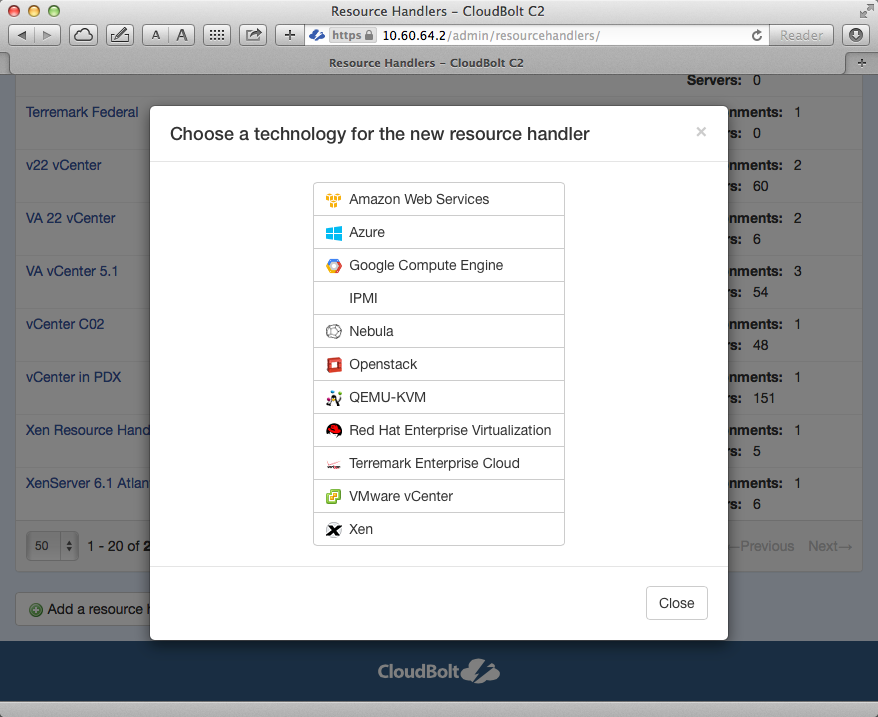

Nebula

We're also proud to announce an all-new connector for Nebula Private Cloud environments.

With this new connector, CloudBolt customers gain the ability to deploy into and manage servers, applications, and even entire services in Nebula-backed environments. Nebula private cloud customers using CloudBolt gain immediate access to all of CloudBolt's features in both new and existing environments:

- Chargeback and Showback

- Reporting

- Governance

- Automated provisioning and management

- Lifecycle management

- Software license management

- And more

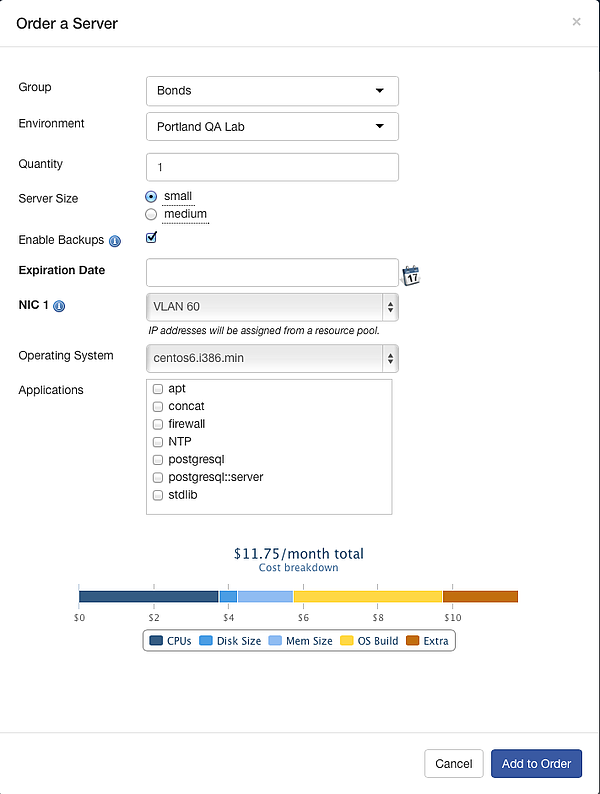

Service Catalog

Customers have been using the CloudBolt Service Catalog to give end users self-service access to entire application stacks for some time now. In v4.6, we've updated the service creation process to make it even more straightforward. Just as they can for the single-server ordering process, admins can alter how the service ordering process looks for different end users and deployment environments. End users can be prompted to enter specific information as necessary based on their desired target deployment environment.

Once a user places an order, CloudBolt’s built-in approval mechanism can provide additional validation before CloudBolt steps through any number of automated processes required to deliver a fully functional application stack.

The end result is clear: CloudBolt administrators can quickly create new service offerings that are able to span any supported target environment. Regardless of your platform of choice, CloudBolt can deliver a complete stack to your end users, and in less time than you think.

Active Directory Group Mapping

Are you using one or more AD environments to authenticate CloudBolt users? In v4.6, admins gain the ability to map AD groups to CloudBolt groups. This AD group mapping also works with multiple AD environments, so if you're using CloudBolt in a multi-tenant capacity, you can still pick and choose how authentication is handled for each tenant.
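To make the idea concrete, here is a minimal conceptual sketch of per-environment group mapping. It is not CloudBolt's API or data model: the `GROUP_MAP` structure, the `resolve_groups` function, and every domain and group name are hypothetical, chosen only to show how one AD environment per tenant can map to its own set of CloudBolt groups.

```python
# Illustrative only: this is NOT CloudBolt's API or internal data model.
# All names below (GROUP_MAP, resolve_groups, domains, groups) are hypothetical.
from typing import Dict, List, Set

# AD environment (domain) -> AD group DN -> CloudBolt group names.
GROUP_MAP: Dict[str, Dict[str, List[str]]] = {
    "corp.example.com": {
        "CN=DevOps,OU=Groups,DC=corp,DC=example,DC=com": ["Engineering"],
    },
    "tenant-b.example.net": {
        "CN=Admins,OU=Groups,DC=tenant-b,DC=example,DC=net": [
            "Tenant B Admins",
            "Tenant B Users",
        ],
    },
}


def resolve_groups(domain: str, ad_groups: List[str]) -> Set[str]:
    """Return the CloudBolt groups implied by a user's AD memberships.

    Unknown domains and unmapped AD groups contribute nothing.
    """
    per_domain = GROUP_MAP.get(domain, {})
    resolved: Set[str] = set()
    for ad_group in ad_groups:
        resolved.update(per_domain.get(ad_group, []))
    return resolved
```

Keeping a separate mapping per AD environment is what lets each tenant's authentication be handled independently: a group name that exists in two domains never collides.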

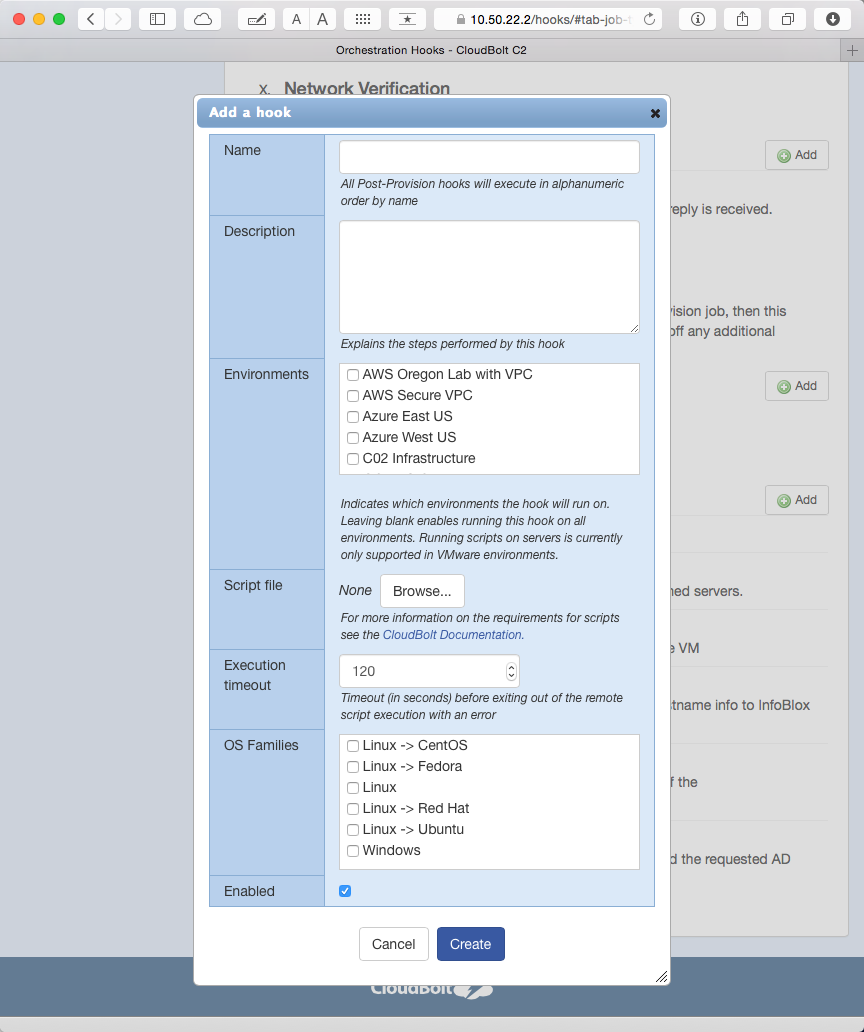

Orchestration Hooks

Orchestration Hooks enable IT administrators to automate every step needed to deliver IT resources and applications to end users. Building on this capability, we've added a new Orchestration Hook type that enables the execution of an arbitrary remote script.

This further extends CloudBolt’s lead as the most powerful cross-platform application deployment and management platform, as it can now be seamlessly integrated into nearly any manually-scripted provisioning and management process. Reusing your existing IP has never been easier or faster.

Connector Improvements

Discovering and importing current state from existing environments is a key CloudBolt strength. In v4.6, discovery is even more thorough, as we now also detect all disk information from VMware vCenter virtual machines as well as AWS AMI and Microsoft Azure public cloud instances.

Have a lot of VMs? Users in environments with tens of thousands of VMs will be happy to learn that VM discovery and sync are now faster and more efficient.

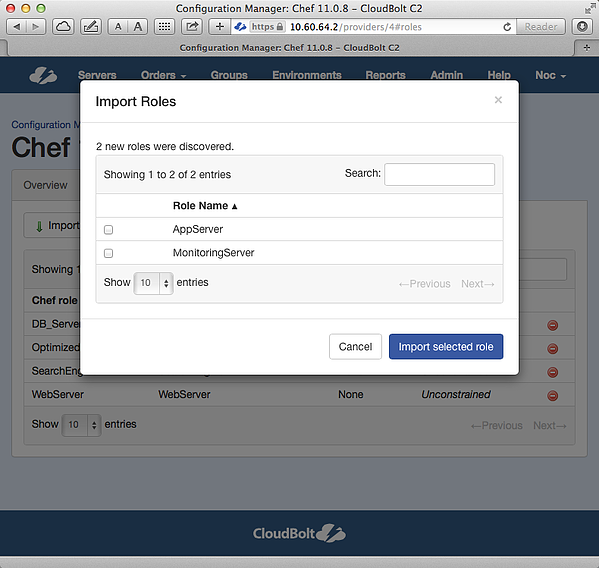

Puppet Enterprise users can now also leverage multiple Puppet environments rather than just the default "Production". This can further help customers simplify their IT environments.

Get It Now

The CloudBolt Cloud Manager v4.6 is available today via the CloudBolt support portal. Our updates are just another feature, and take mere minutes to complete.

Don't have CloudBolt yet?

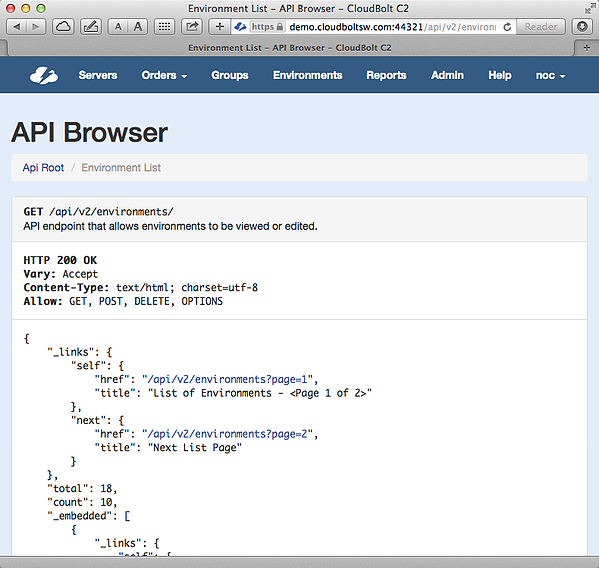

C2's built-in API browser greatly aids development against the new C2 API v2

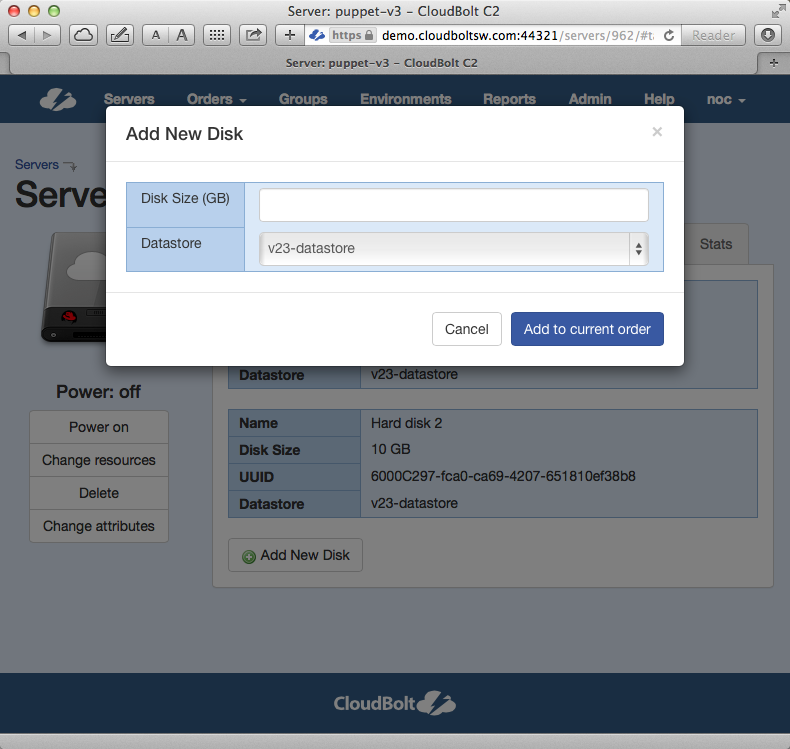

Users can add additional virtual disks to VMware VMs

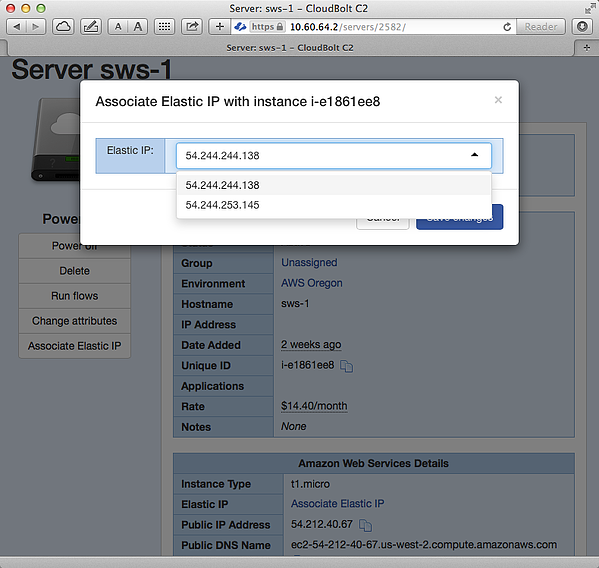

Users can now select and associate AWS Elastic IP addresses from within C2.