In numerous conversations with customers and analysts, it has become clear that the industry consensus is that Cloud Managers are as game-changing for IT as server and network virtualization themselves. For those taking a longer-term view of Cloud Computing (Public, Private and Hybrid), the Cloud Manager is clearly becoming the keystone of value. Many believe that Cloud Managers will initiate the next major wave of change in IT environments. How? Let’s look to the past to predict the future.

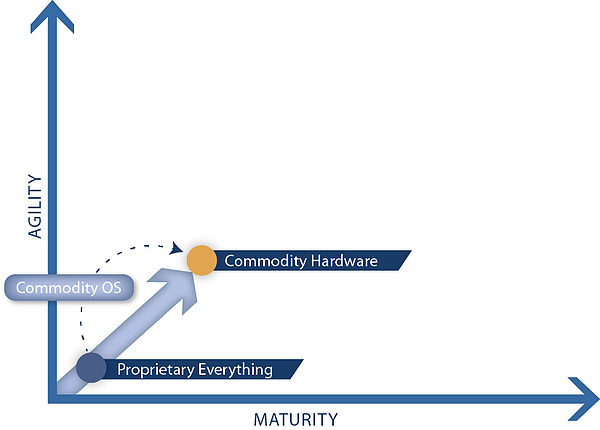

Proprietary Everything

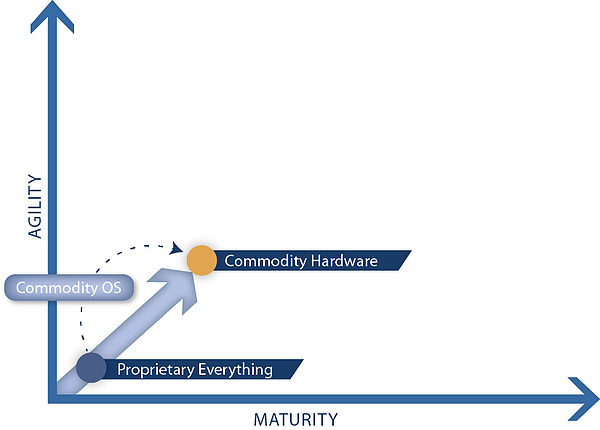

In the early 1980s, general-purpose computers were spreading across the business environment. These systems were fully proprietary mainframes and minicomputers. The hardware (CPU, memory, storage, etc.), the operating system and even any available software all came from the system’s manufacturer (vendors included DEC, Prime, Harris, IBM, HP and DG, among others). Businesses couldn’t even acquire compilers for their systems from a third party; compilers were available only from the system’s manufacturer.

Commodity OS leads to Commodity Hardware

Broad interest in, and adoption of, Unix started a sea change in the IT world. As more hardware vendors supported Unix, it became easier to migrate from one vendor’s system to another. Vendors also began building their systems on commodity microprocessors, eventually x86-compatible ones, rather than on proprietary CPU architectures optimized around their proprietary operating systems.

Hardware with compatible architectures not only accelerated the move to commodity operating systems (Unix, Linux and Windows) but also, in turn, increased pressure on vendors to fully commoditize server hardware. The resulting commoditization of hardware systems drove prices down steeply. To this day, server hardware largely remains a commodity.

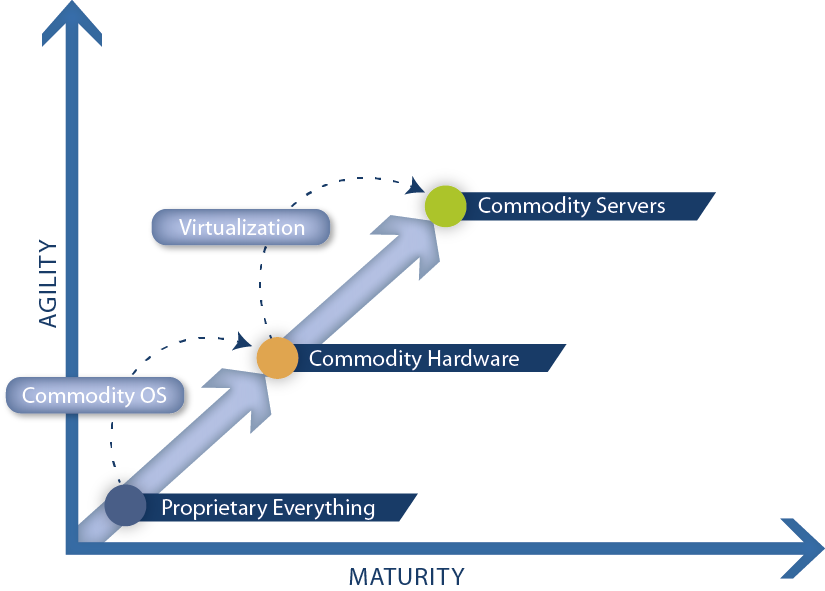

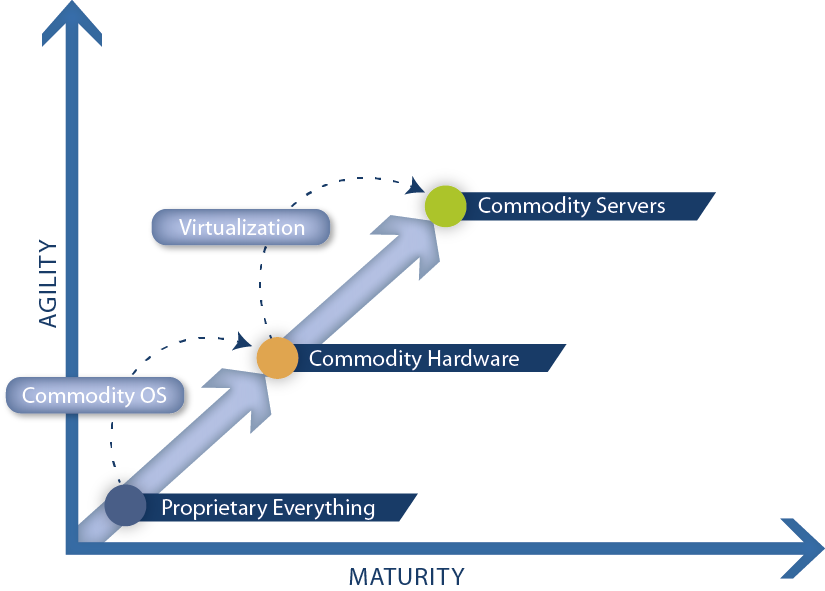

Virtualization Commoditizes Servers

Despite less expensive commodity operating systems and hardware, modernizing enterprise IT organizations were still spending large sums on new servers to keep up with the rapidly growing demands of new applications. Worse, IT organizations struggled to take full advantage of the hardware they were paying for, and server utilization became a real issue. Procuring servers still took considerable time because of organizational processes: every new server required significant effort to purchase, rack and stack, and finally deploy. Power and cooling requirements became a significant concern. Integrating storage and networking, and deploying and maintaining software, still caused considerable delays in any workflow that relied on new hardware.

Server virtualization arrived commercially in the late 1990s and started gaining considerable traction in the mid-2000s. Virtualizing the underlying physical hardware answered the thorny utilization issue by allowing multiple server workloads, each with low individual utilization, to be consolidated onto a single physical server. Virtualization also offered a partial solution to the procurement problem and eased the power and cooling issues posed by rampant growth in server hardware. Networking, storage and application management, however, remained disjointed and typically took as long to implement as they had before virtualization, becoming a major impediment to flexibility in enterprise IT shops.

Now we find ourselves in 2013. Most enterprise IT shops have implemented some level of virtualization, and the SaaS and cloud-based service providers have standardized on it. Virtual servers can be created rapidly at no perceived cost beyond the associated licenses, so VM servers are essentially a commodity, even though VMware clearly holds the largest share of the market for the underlying (enabling) technology at this point.

The problem with these commodity VM servers is that making them fully available for use still hinges on integrating them with other parts of the IT environment that are far from commoditized and are complex to configure. The VM’s dependencies on networking, automation tools, storage and so on limit how quickly and flexibly the IT group can configure these resources and give the business rapid access to them.

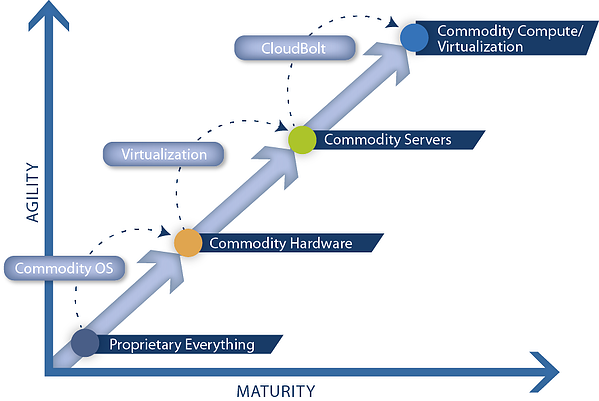

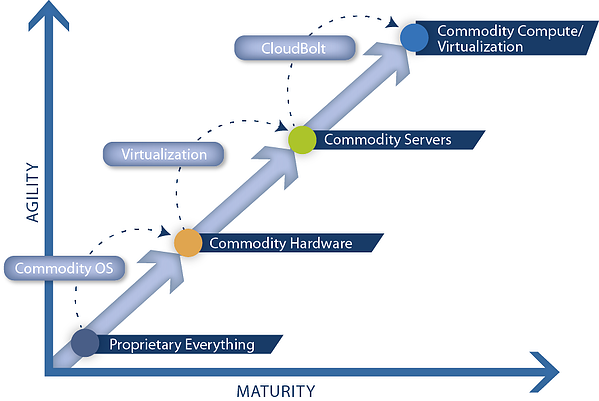

Network Virtualization arrives

A huge pain point in flexibly deploying applications and workloads is that networking is still largely tied to the physical configuration of network hardware devices across the enterprise. The typical enterprise network is both complex and fragile, a condition that does not encourage rapid changes in the network layer to accommodate business or mission application requirements. An inflexible network that stays available is always preferred to one that fails because of the unintended consequences of a configuration change.

In much the same way that server virtualization abstracted the server from the underlying hardware, network virtualization completely abstracts the logical network from the physical network. With network virtualization, network configuration is freed from the physical devices, enabling rapid deployment of new virtual networks and more efficient management of existing ones. Rapid adoption of network virtualization technology in the future is all but guaranteed.

Commoditizing all IT resources and compute

With both network and server virtualization, we are closer than ever to the real benefit of 'Cloud Computing': the promise of fully commoditized IT resources and compute. To get there, however, we need to coordinate and abstract the management and control of both the modern enterprise’s internal IT resources and the compute resources it consumes from external public cloud providers.

To enable rapid and flexible coordination of IT resources, the management of enterprise application resources must be abstracted from the underlying tools. The specific technologies involved (server virtualization, network virtualization, automation, storage, public cloud providers, etc.) are then treated as commodities that can be exchanged or deprecated without harming the business capabilities of enterprise IT. This abstraction also lets the IT organization adopt new and emerging technologies to add functionality and capability without exposing the business to the often sharp edges of leading-edge technology.
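As a rough illustration of the abstraction described above, the Python sketch below sends business-level requests through a single manager interface while the back end underneath is swapped. Every name in it (`VMRequest`, `ComputeProvider`, `OnPremHypervisor`, `PublicCloud`, `CloudManager`) is hypothetical and does not correspond to any real product or API; the "providers" only return identifier strings rather than provisioning anything.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class VMRequest:
    """Business-level request: what the application needs, not how to build it."""
    name: str
    cpus: int
    memory_gb: int
    network: str


class ComputeProvider(ABC):
    """Hypothetical interface that every pluggable back end must implement."""

    @abstractmethod
    def provision(self, request: VMRequest) -> str:
        """Create the VM and return a provider-specific identifier."""


class OnPremHypervisor(ComputeProvider):
    # Illustrative stand-in for an internal server-virtualization platform.
    def provision(self, request: VMRequest) -> str:
        return f"onprem/{request.network}/{request.name}"


class PublicCloud(ComputeProvider):
    # Illustrative stand-in for an external public cloud provider.
    def provision(self, request: VMRequest) -> str:
        return f"cloud/{request.network}/{request.name}"


class CloudManager:
    """Routes business requests to whichever provider is currently configured."""

    def __init__(self, provider: ComputeProvider) -> None:
        self._provider = provider

    def swap_provider(self, provider: ComputeProvider) -> None:
        # Replacing the back end does not change how callers make requests.
        self._provider = provider

    def deploy(self, request: VMRequest) -> str:
        return self._provider.provision(request)


# Usage: the same request works unchanged after the back end is exchanged.
request = VMRequest(name="web01", cpus=2, memory_gb=4, network="app-tier")
manager = CloudManager(OnPremHypervisor())
print(manager.deploy(request))  # onprem/app-tier/web01
manager.swap_provider(PublicCloud())
print(manager.deploy(request))  # cloud/app-tier/web01
```

The design point is that callers depend only on `VMRequest` and `CloudManager.deploy`, so retiring one technology and adopting another becomes a configuration change rather than a change to every consuming workflow.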

The necessary resource abstraction and control is the domain not just of the virtualization manager but, really, of the Cloud Manager. In short, the Cloud Manager commoditizes compute by commoditizing IT resources across the enterprise and beyond.

With so much riding on this role, it is no wonder that every vendor wants to pitch a solution in this space. The orientation, or bias, of each vendor’s approach to building a Cloud Manager for enterprise IT will play a critical role in the ultimate success of both the products and the customers who implement them.