I love the history of technology. My favorite place in Silicon Valley is the Computer History Museum. It’s a living timeline of computing technology, where each of us can find the point when we first joined the party.

It’s great to learn about technology pioneers – the geek elite. Years ago I took a course on computer operating systems. We were studying the evolution of UNIX, and we’d gotten to Lions’ Commentary on UNIX 6th Edition, circa 1977. (As an aside, the entire UNIX operating system at that time was fewer than 10,000 lines of code. By 2011 the Linux kernel alone had grown to 15 million lines across 37,000 files.) As we studied the process scheduler section, we came to one of the great “nerdifacts” of computer programming: line 2238, a comment that reads:

* You are not expected to understand this.

That one line perfectly expresses my joys and frustrations with computing. The joy comes from the confirmation that computers can do amazingly clever things. The frustration comes from the dismissive way I’m reminded of my inferiority. And I think that sums up how most people feel about technology.

“Your call is important to us. Please continue to hold.”

In the corporate world, end users have a love-hate relationship with their IT departments. It’s true that they help us to do our jobs. But rather than giving us what we need, when we need it, our IT folks seem to always be telling us why our requests cannot be fulfilled. Throughout my career I’ve been on both sides of this conversation. Early on, I was the requester/supplicant who’d make my pleas to IT for services or support, only to be told to go away and come back on a day that didn’t end in ‘y’.

Later, I was the IT administrator, then manager. In those roles I was the person saying ‘no’ – far more often than I wanted. It wasn’t because I got perverse pleasure out of disappointing people. That was just the way my function was structured, measured, and delivered.

Almost without exception, the two metrics that drove my every action in IT operations were cost and uptime. Responsiveness and customer satisfaction were not within my charter. Simply put, I got no attaboys for doing things quickly. While this certainly annoyed my customers, they knew and I knew that they had no alternatives.

The Age of Outsourcing

Things began to change in the late 1980s and early 1990s (yeah, I go back a ways) when large companies decided to try throwing money at their IT problems to make them go away. So began the age of IT outsourcing, when companies tried desperately to disown in-house computer operations. Such services were “outside of our core competency,” they reasoned, and so were better performed by seasoned professionals from large companies with three-letter names like IBM, EDS, and CSC.

Fast-forward 25 years and we find the IT outsourcing (ITO) market in decline. There are many reasons for this. The most common are:

- Actual savings are often far less than projected

- Long-term contracts limit flexibility, particularly in a field that changes as constantly as IT

- There is an inherent asymmetry of goals between service provider and service consumer

- Considerable effort is required to manage and monitor contracts and SLA compliance

- New technologies like cloud computing offer viable alternatives

Just as video killed the radio star, cloud computing is a fresher, sexier alternative to ITO for enterprises searching for the all-important “competitive advantage”.

Power to the People!

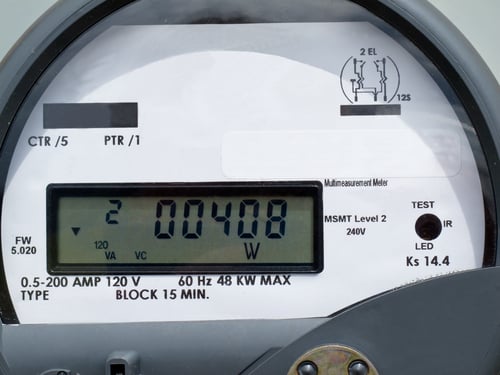

Cloud computing isn’t just new wine in old bottles; it’s a fundamental change in the way computing resources are made available and consumed. Cloud computing focuses on user needs (the ‘what’) rather than underlying technology (the ‘how’).

The National Institute of Standards and Technology (NIST) defines five essential characteristics of cloud computing. One of these is ‘On-demand self-service’. Think about what that means. For the end user, it means getting what we need, when we need it. For business, it means costs that align with usage, for services that make sense. And for IT, it means being able to say ‘yes’ for a change.

For too long, we have been held captive by technology. Cloud computing promises to free us from technology middlemen. It enables us to consume services that we value.

At its core, cloud computing is technology made understandable.

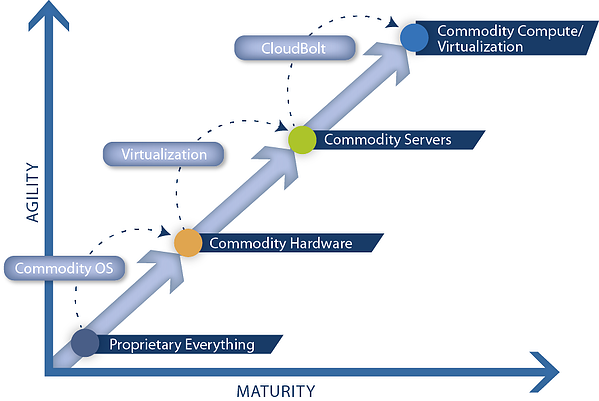

CloudBolt is a cloud management platform that enables self-service IT. It allows IT organizations to define ready-to-use systems and environments, and to put them in the hands of their users. Isn’t that a welcome change?

An assembly-line model cannot deploy VMs as rapidly as needed. Automation is required.
